CDR Technology and Its Effects on Integrity Loss

Transferring data from one point to another inherently runs the risk of integrity loss; it’s the nature of the beast. In high-speed communications especially, numerous factors, including signal noise, static spikes and more, can corrupt data in transit.

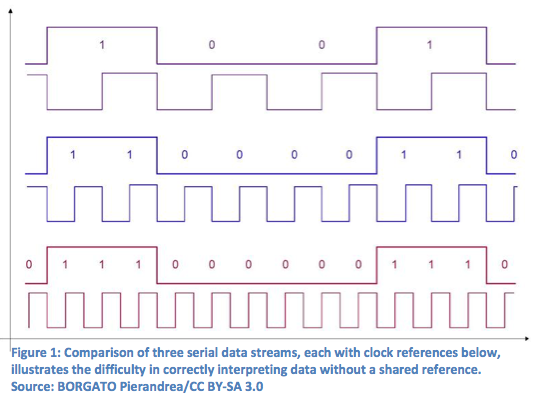

One of the more common challenges is getting both the transmitter and the receiver on the same page in terms of signal timing — which can be more complex than it sounds.

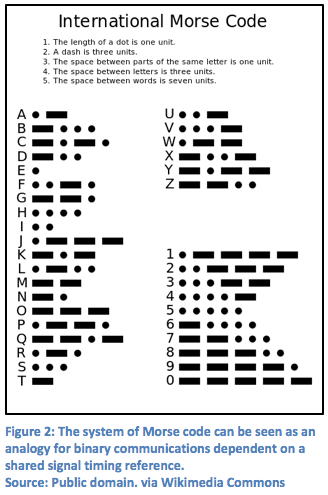

To better explain the difficulty, consider a 19th-century communication technology: Morse code. Built on a series of on/off electrical pulses, its system of representing text characters with short dots and long dashes is not unlike a binary system of ones and zeros. Morse code was designed so that the length of each character is roughly inversely related to how frequently that character appears in English: the most common letter, E, is a single dot; two dots represent the letter I; three dots represent the letter S; and so on.
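To make the binary analogy concrete, here is a minimal Python sketch that turns a few letters into a timed on/off signal, one string character per time unit. It assumes the standard International Morse timing convention (a dot lasts one unit, a dash three units, the gap inside a character one unit, the gap between characters three units and the gap between words seven units). The table covers only a handful of letters, and the names `MORSE`, `encode_letter` and `encode` are illustrative, not taken from any library.

```python
# Illustrative subset of the International Morse code table.
MORSE = {"E": ".", "I": "..", "S": "...", "H": "....", "T": "-", "M": "--", "O": "---"}

# Standard timing convention, one string character per time unit ("1" = on, "0" = off).
DOT, DASH = "1", "111"      # a dot lasts 1 unit, a dash 3 units
SYMBOL_GAP = "0"            # silence between the dots/dashes of one character
LETTER_GAP = "000"          # silence between characters
WORD_GAP = "0000000"        # silence between words


def encode_letter(letter: str) -> str:
    """Return the on/off pattern of a single letter."""
    marks = [DOT if mark == "." else DASH for mark in MORSE[letter.upper()]]
    return SYMBOL_GAP.join(marks)


def encode(text: str) -> str:
    """Encode space-separated words as one on/off stream."""
    words = [LETTER_GAP.join(encode_letter(ch) for ch in word) for word in text.split()]
    return WORD_GAP.join(words)


if __name__ == "__main__":
    print(encode("EE"))  # 10001
    print(encode("I"))   # 101
```

The encodings of “EE” and “I” differ only in how long the silence between the two dots lasts, which is why the receiver has to agree with the transmitter on timing.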

As long as the transmitter and the receiver share an understanding of the signal’s timing, the code can be interpreted correctly. But suppose the code is transmitted at a different pace than the receiver expects: what was meant as two Es might instead be misinterpreted as a single I, or what was meant as an S might be misinterpreted as three Es.
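The first of these mix-ups can be reproduced with a toy decoder. In this minimal Python sketch, the helper names, the decode-table subset and the decision thresholds are all assumptions chosen for illustration: the receiver measures every on or off run in its own assumed time unit, treats marks shorter than 2 units as dots, ends a character on any gap of 2 units or more, and also ends a word on gaps of 5 units or more. Fed the signal for “EE”, a receiver whose assumed time unit is three times too long hears a single I.

```python
from itertools import groupby

# Illustrative subset of International Morse code, keyed by dot/dash pattern.
DECODE = {".": "E", "..": "I", "...": "S", "-": "T", "--": "M", "---": "O"}


def stretch(units: str, samples_per_unit: int) -> str:
    """Transmitter pace: hold each on/off time unit for samples_per_unit samples."""
    return "".join(bit * samples_per_unit for bit in units)


def receive(samples: str, samples_per_unit: float) -> str:
    """Decode on/off samples, assuming each time unit lasts samples_per_unit samples."""
    text, symbol = "", ""
    for level, run in groupby(samples):
        units = len(list(run)) / samples_per_unit  # run length in the receiver's units
        if level == "1":
            symbol += "." if units < 2 else "-"    # dot ~1 unit, dash ~3 units
        elif units >= 2:                            # longer than a gap inside a character
            if symbol:
                text += DECODE.get(symbol, "?")
                symbol = ""
            if units >= 5:                          # word gap ~7 units
                text += " "
    if symbol:
        text += DECODE.get(symbol, "?")
    return text


if __name__ == "__main__":
    sent = "10001"            # "EE": dot, 3-unit letter gap, dot
    print(receive(sent, 1))   # EE  (receiver's timing matches the sender)
    print(receive(sent, 3))   # I   (receiver expects a pace three times slower)
```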

One way modern communication interfaces solve this problem is to send an additional signal from the transmitting end to serve as a “clock.” The clock is a steadily timed, alternating on/off signal that serves as a reference for the rate at which the data is transmitted. In effect, it tells the receiver the pace at which to interpret the signal.
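A forwarded clock can be modeled in a few lines of Python. This is a toy sketch rather than any particular interface: it assumes the clock rises halfway through each bit period and that the receiver samples the data line on every rising edge (real interfaces may use the falling edge or both edges), and the function names are hypothetical. Because the sampling instants come from the clock itself, the receiver recovers the bits without knowing how many samples each bit lasts.

```python
def transmit_with_clock(bits: list[int], samples_per_bit: int) -> tuple[list[int], list[int]]:
    """Return (data, clock) waveforms; the clock rises halfway through every bit."""
    if samples_per_bit < 2:
        raise ValueError("need at least 2 samples per bit to draw a clock edge")
    low = samples_per_bit // 2
    data, clock = [], []
    for bit in bits:
        data += [bit] * samples_per_bit                     # data held for the whole bit
        clock += [0] * low + [1] * (samples_per_bit - low)  # clock low, then high
    return data, clock


def receive_with_clock(data: list[int], clock: list[int]) -> list[int]:
    """Sample the data line at every rising edge of the accompanying clock."""
    return [data[i] for i in range(1, len(clock)) if clock[i - 1] == 0 and clock[i] == 1]


if __name__ == "__main__":
    bits = [1, 0, 1, 1, 0, 0, 1]
    for rate in (2, 5, 8):  # the receiver never needs to know this rate
        data, clock = transmit_with_clock(bits, rate)
        print(rate, receive_with_clock(data, clock) == bits)  # 2 True, 5 True, 8 True
```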

Only trouble is, many high-speed data streams are sent without an accompanying clock signal, which lets them run faster, consume less power, suffer less interference and so on. To reliably interpret the incoming data, therefore, the receiver must regenerate the clock itself. To do so, it generates a reference clock at approximately the expected frequency and then phase-aligns that clock to the transitions in the incoming data stream. The clock is then said to be “recovered.” Once the clock is recovered, the data can be recovered as well: the receiver samples the incoming data at instants set by the recovered clock, producing a clean bit stream.
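The recovery steps above can be sketched as a toy digital CDR in Python. The sketch rests on several assumptions: the line is NRZ-coded and oversampled, the receiver’s local clock runs at a nominal `nominal_spb` samples per bit that differs slightly from the transmitter’s actual rate, and phase alignment uses a simple first-order (proportional) loop with a hypothetical `gain` parameter. Practical CDRs are typically built in hardware around a phase-locked loop or a phase interpolator; all names here are made up for illustration.

```python
import random


def nrz_samples(bits: list[int], samples_per_bit: float) -> list[int]:
    """Transmitter: hold each bit for samples_per_bit samples (may be fractional)."""
    n_samples = int(len(bits) * samples_per_bit)
    return [bits[min(int(n / samples_per_bit), len(bits) - 1)] for n in range(n_samples)]


def recover_bits(samples: list[int], nominal_spb: float, gain: float = 0.5) -> list[int]:
    """Toy CDR: a local clock at the nominal rate, phase-aligned to data transitions.

    `boundary` is the receiver's estimate of where the current bit period starts.
    Each data transition nudges it toward the observed edge (clock recovery);
    the data is then sampled in the middle of each bit period (data recovery).
    """
    boundary = 0.0
    recovered = []
    for n, level in enumerate(samples):
        if n > 0 and level != samples[n - 1]:
            edge_time = n - 0.5                  # edge lies between samples n-1 and n
            phase_error = edge_time - boundary   # > 0 means the local clock is early
            boundary += gain * phase_error       # proportional phase correction
        if n >= boundary + nominal_spb / 2:      # reached mid-bit on the local clock
            recovered.append(level)              # sample the incoming data
            boundary += nominal_spb              # local clock advances one bit period
    return recovered


if __name__ == "__main__":
    rng = random.Random(7)
    bits = [rng.randint(0, 1) for _ in range(2000)]
    samples = nrz_samples(bits, samples_per_bit=8.1)  # sender about 1% slower than nominal
    print("phase-aligned:", recover_bits(samples, nominal_spb=8.0) == bits)
    print("free-running: ", recover_bits(samples, nominal_spb=8.0, gain=0.0) == bits)
```

With `gain=0.0` the local clock never aligns to the data, so the small frequency error accumulates until the receiver samples the wrong bits and eventually even recovers the wrong number of bits; with the phase correction enabled, each transition pulls the sampling point back toward the middle of the bit.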

This combination of clock and data recovery is known as CDR, and it is a key component of optical communication systems.